Introduction

Docker is a platform that makes it easy to create, deploy, and run applications using containers. But what exactly is a container? Why are they considered a revolution in modern software development?

In this first part of the Docker Roadmap series, we’ll break down what containers are, why they matter, and how they compare to traditional methods like virtual machines and bare-metal servers. Whether you’re a software engineer, data engineer, or DevOps practitioner, understanding containers is the first step to mastering Docker.

What is Docker?

Before we dig into containers themselves, let’s place Docker. Docker is a platform that makes creating, deploying, and managing containers simple and accessible.

Think of Docker as the “easy button” for containers. While Linux containers existed before Docker, they required deep system administration knowledge. Docker made them broadly usable by bundling the tooling for building, sharing, and running containers into one ecosystem.

Docker’s Core Purpose:

- Simplify container creation through Dockerfiles (simple text recipes)

- Standardize container distribution via Docker Hub and registries

- Streamline container execution with an intuitive command-line interface

- Enable container orchestration through Docker Compose and Swarm

The Docker Ecosystem:

Docker isn’t just one tool—it’s a complete platform:

- Docker Engine: The core runtime that manages containers on your machine

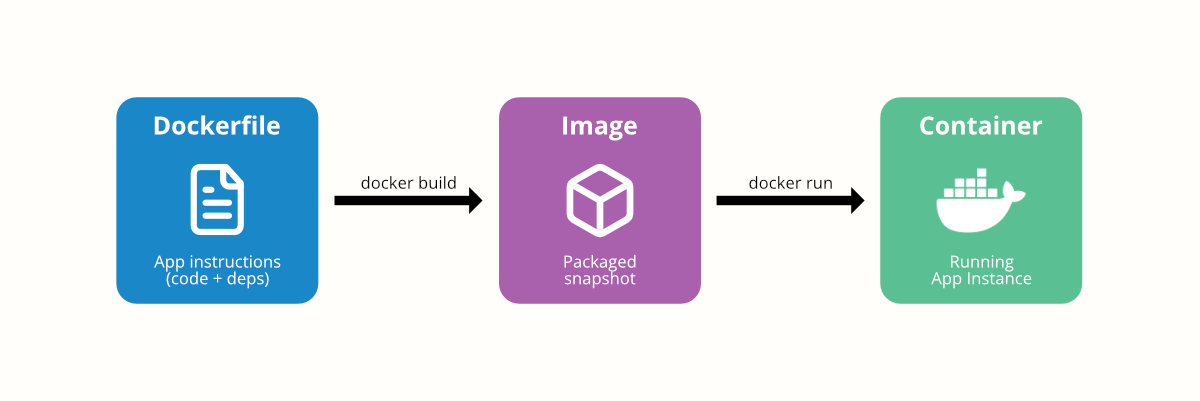

- Dockerfile: A simple text file that defines how to build your container

- Docker Images: Read-only templates used to create containers

- Docker Hub: A cloud-based registry to share container images

- Docker Compose: Tool for defining multi-container applications

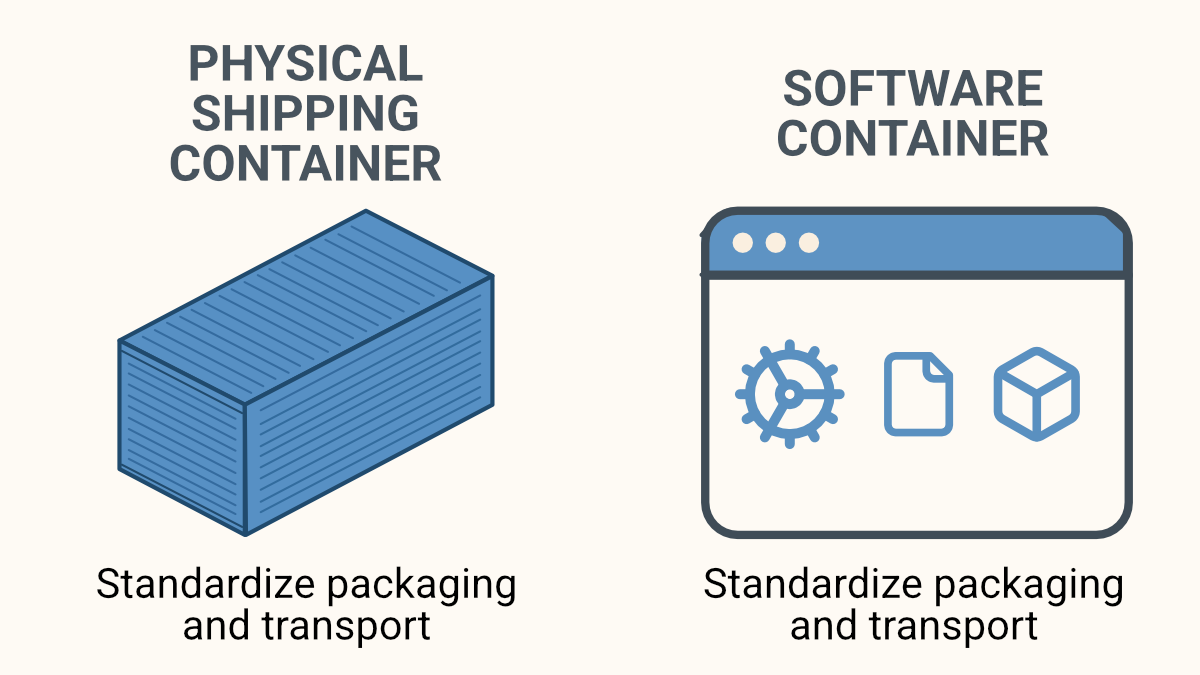

In simple terms: If containers are like shipping containers, Docker is the entire shipping infrastructure—the cranes, trucks, ports, and logistics system that makes global shipping possible.

What Are Containers?

Think of a container as a standardized shipping container for software. Just as a physical shipping container carries goods from factories to ports to trucks without needing to unload and reload, a software container wraps up an application and all its dependencies so it can run consistently anywhere.

In practice, a container is an isolated process running on a host operating system. It’s much lighter than a virtual machine because it doesn’t require a full OS per app, but still offers strong isolation and control through Linux kernel features like namespaces and cgroups.
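To make "isolated process" a little more concrete: on Linux, the namespaces a process belongs to are visible under /proc/<pid>/ns. The following is a minimal sketch (Linux-only; the function name `current_namespaces` is illustrative, not part of any Docker API) that lists them for the current process. Run once on the host and once inside a container, the identifiers would typically differ, which is the separation described above.

```python
import os

def current_namespaces(proc_ns_dir="/proc/self/ns"):
    """Return {namespace_name: identifier} for the current process.

    Returns an empty dict on systems without /proc (e.g. macOS or Windows).
    """
    if not os.path.isdir(proc_ns_dir):
        return {}
    namespaces = {}
    for name in sorted(os.listdir(proc_ns_dir)):
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            namespaces[name] = os.readlink(os.path.join(proc_ns_dir, name))
        except OSError:
            # Some entries may be unreadable without extra privileges.
            continue
    return namespaces

if __name__ == "__main__":
    for name, ident in current_namespaces().items():
        print(f"{name:10s} {ident}")
```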

Key Characteristics:

- Isolation: Runs in its own sandboxed environment using namespace isolation

- Portability: Runs anywhere a compatible container runtime such as Docker is available, provided the image matches the host’s CPU architecture

- Efficiency: Shares the host kernel – faster and lighter than VMs

- Consistency: Encapsulates all dependencies

- Ephemeral: Easy to spin up, shut down, and recreate

You can also think of containers like Python virtual environments (venv) or Conda environments, but for entire applications – including binaries, configurations, system libraries, and the OS userland (everything except the kernel, which is shared with the host).

Why Do We Need Containers?

Before containers, deploying apps meant wrestling with different versions of libraries, OS configurations, and infrastructure quirks. Here are some of the problems containers solve:

“It Works on My Machine” Syndrome

An app that runs perfectly on a dev laptop crashes in production. Containers fix this by baking the environment into the image. What runs in dev will run the same in staging and prod.

Dependency Conflicts

Two apps require different versions of the same library. On a traditional server, this leads to chaos. With containers, each app brings its own dependencies.

Resource Inefficiency

VMs are heavy and take minutes to start, often requiring 2GB+ of RAM just for the operating system. Containers boot in seconds and might use only 50-100MB of RAM for the same application.
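As a rough back-of-the-envelope check of those figures, the sketch below counts how many instances fit on one host if RAM were the only constraint. The 16 GB host is an assumption chosen for illustration; the per-instance numbers are the ones quoted above, and real workloads will vary.

```python
def instances_that_fit(host_ram_mb, per_instance_mb):
    """How many instances fit if RAM is the only constraint (integer division)."""
    return host_ram_mb // per_instance_mb

HOST_RAM_MB = 16 * 1024      # assumed host size: 16 GB
VM_RAM_MB = 2 * 1024         # lower bound per VM quoted above (guest OS included)
CONTAINER_RAM_MB = 100       # upper end of the 50-100MB range quoted above

vms = instances_that_fit(HOST_RAM_MB, VM_RAM_MB)
containers = instances_that_fit(HOST_RAM_MB, CONTAINER_RAM_MB)
print(f"VMs: {vms}, containers: {containers}")  # VMs: 8, containers: 163
```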

Scalable and Reproducible Deployments

With an orchestrator such as Docker Swarm or Kubernetes, you can spin up 100 containers across 10 servers with a single command. CI/CD pipelines love containers because the same image runs unchanged in every environment.

Environment Standardization

Your local setup = staging = production. This eliminates configuration drift and environment-specific bugs.

Bare Metal vs VMs vs Containers

Let’s compare how we used to deploy apps with how we do it today.

1. Bare Metal Servers

- Run apps directly on hardware

- Fastest performance, but risky: one crash can take everything down

- Hard to isolate apps, manage resources, or update safely

- Typical RAM usage: All available system memory shared between apps

2. Virtual Machines

- Run full guest operating systems on top of a hypervisor, which runs either directly on the hardware or inside a host OS

- Strong isolation, but heavy: each VM has its own kernel

- Boot time: 1-5 minutes

- Typical RAM usage: 2-4GB minimum per VM (including guest OS)

- Better than bare metal for running multiple apps safely

3. Containers

- Share the host OS kernel, with namespaces and cgroups keeping them isolated from each other

- Lightweight and fast

- Boot time: 1-5 seconds

- Typical RAM usage: 50-500MB per container (application only)

- Perfect for microservices, APIs, and reproducible environments

Real-World Example

Deploying PostgreSQL:

- On bare metal: Install manually, risk conflicts with other databases, hard to replicate across environments

- On a VM: Install inside a virtual OS, allocate 2GB+ RAM, wait minutes to start

- In Docker: One command, isolated environment, portable image, starts in seconds

# One-liner to run PostgreSQL

$ docker run -d -e POSTGRES_PASSWORD=secret -p 5432:5432 postgres:15

Comparison Table

| Feature | Bare Metal | Virtual Machines | Containers |
|---|---|---|---|
| Resource Overhead | None | High (2-4GB+ RAM per VM) | Low (50-500MB RAM per container) |
| Isolation | None | Strong (hardware-level, separate kernel per VM) | Process-level (namespaces and cgroups, shared kernel) |
| Startup Time | N/A | 1-5 minutes | 1-5 seconds |
| Portability | Poor | Good | Excellent |
| Scalability | Low | Medium | High |
| Ease of Use | Complex | Moderate | Simple |

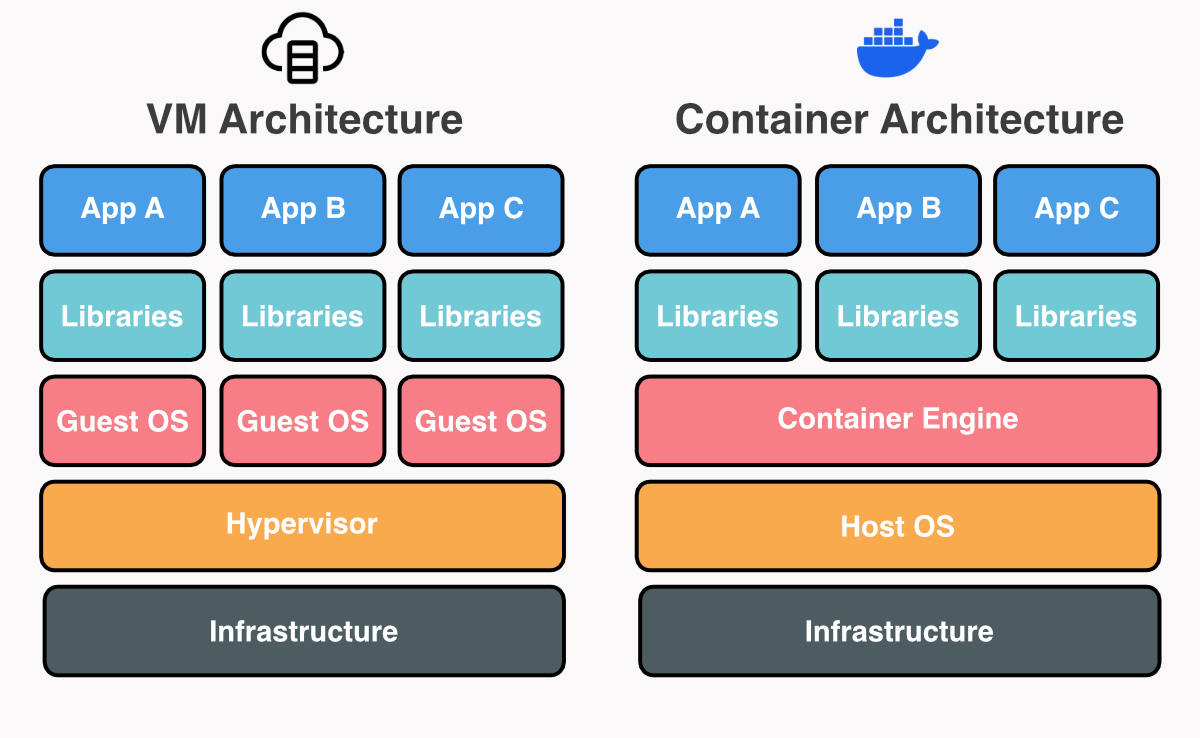

What Each Layer Means

🖥️ VM Architecture

- App A / B / C: Apps running inside separate virtual machines. Each is completely isolated with its own OS.

- Libraries: Dependencies installed independently inside each VM. No sharing between VMs — more duplication.

- Guest OS: A full operating system (like Ubuntu or Windows) inside each VM. Each copy runs in parallel, adding overhead.

- Hypervisor: The software layer that simulates hardware to run multiple VMs. Examples: VMware ESXi, VirtualBox, KVM, Hyper-V.

- Infrastructure: The physical machine (your laptop, a server, or a cloud VM). Provides CPU, memory, disk, and networking to all higher layers.

🐳 Container Architecture

- App A / B / C: Individual applications running in isolated environments (e.g., web servers, microservices, tools). Each lives in its own container.

- Libraries: Dependencies the app needs — Python packages, system libraries, runtimes. Isolated per container to avoid conflicts.

- Container Runtime: Software that manages containers: launching, isolating, and managing resources (examples: Docker Engine, containerd, runc).

- Host OS: The main operating system running on the server (usually Linux). All containers share this OS kernel, which makes them lightweight.

Docker and the Open Container Initiative (OCI)

How Docker Changed the Game

Before Docker (2013), Linux containers (e.g., LXC) existed but were hard to use. Docker introduced:

- A simple CLI that made containers accessible to developers

- The Dockerfile format for reproducible builds

- Docker Hub: a public image registry

- Docker Engine: a runtime that abstracted away complexity

Docker made containers accessible to everyone, not just systems engineers. Notably, in 2015 Docker Inc. donated its low-level runtime code to the newly formed Open Container Initiative as runc, which became the reference implementation of the OCI runtime specification.

The Role of the OCI

With container adoption booming, the Open Container Initiative (OCI) was formed to standardize:

- Image format: How container images are built and structured

- Runtime: How containers should execute

- Distribution: How registries store and share images

Thanks to OCI standards, Docker images are portable across other runtimes like:

- containerd (used by Kubernetes)

- Podman (Red Hat’s daemonless alternative)

- runc (low-level runtime)

- CRI-O (Kubernetes-focused runtime)

This ensures long-term compatibility and gives you freedom to choose tools.

Docker Today

Docker is now a modular ecosystem of tools:

- Docker Engine: The core runtime and API

- Docker Desktop: GUI and local development environment for Mac, Windows, and Linux

- Docker Compose: Multi-container workflows via YAML files

- Docker Hub: Public/private image registry with millions of images

- Docker Swarm: Built-in orchestrator (though Kubernetes dominates production)

Example: Building Your First Container

Let’s look at a simple Dockerfile for a Python web application:

# Use official Python image as base (includes Python + pip)

FROM python:3.11-slim

# Set working directory inside container

WORKDIR /app

# Copy requirements file first (for better caching)

COPY requirements.txt .

# Install Python dependencies

RUN pip install -r requirements.txt

# Copy application code

COPY . .

# Document that the app listens on port 5000 (this does not publish the port)

EXPOSE 5000

# Define startup command

CMD ["python", "app.py"]

What each line does:

- FROM: Start with a pre-built Python environment

- WORKDIR: Set /app as our working directory inside the container

- COPY requirements.txt: Copy dependency list first (Docker caches this layer)

- RUN pip install: Install Python packages inside the container

- COPY . .: Copy all application code into the container

- EXPOSE: Document which port the app uses (doesn’t actually publish it)

- CMD: Tell Docker how to start our application
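Why copy requirements.txt before the rest of the code? The sketch below is a toy model of build caching, not Docker's actual implementation: it assumes each layer's cache key depends on the previous layer's key, the instruction text, and the content of any copied files. The file contents ("flask", the print statements) are hypothetical placeholders. Under that assumption, changing only application code leaves the expensive pip install layer reusable.

```python
import hashlib

def layer_keys(steps):
    """Toy cache model: each key hashes the parent key, the instruction,
    and the content of any files the step copies into the image."""
    keys = []
    parent = ""
    for instruction, copied_content in steps:
        digest = hashlib.sha256(
            f"{parent}|{instruction}|{copied_content}".encode()
        ).hexdigest()[:12]
        keys.append(digest)
        parent = digest
    return keys

def build_steps(requirements, app_code):
    """The Dockerfile's steps, paired with the file content each one copies."""
    return [
        ("FROM python:3.11-slim", ""),
        ("WORKDIR /app", ""),
        ("COPY requirements.txt .", requirements),
        ("RUN pip install -r requirements.txt", ""),
        ("COPY . .", requirements + app_code),
    ]

before = layer_keys(build_steps("flask", "print('v1')"))
after = layer_keys(build_steps("flask", "print('v2')"))  # only app code changed

reused = [a == b for a, b in zip(before, after)]
print(reused)  # [True, True, True, True, False]
```

If the two COPY steps were swapped into a single early COPY . ., every code change would alter that layer's key and force the pip install step to rerun.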

To build and run it:

# Build the container image

docker build -t my-python-app .

# Run the container, mapping port 5000

docker run -d -p 5000:5000 my-python-app

You just containerized a Python app. You can run it on your laptop, in CI, or in the cloud, exactly the same way every time.

Here is the Docker Build Process Flow you’ve just built:

Common Misconceptions and Pitfalls

“Containers = VMs”

Not really. Containers don’t emulate full hardware or run separate operating systems. They use the host kernel with namespace isolation tricks to create the illusion of separation.

“Containers are always secure”

Containers provide process isolation, but they’re not automatically secure. Common security mistakes include:

- Running processes as root inside containers

- Not scanning images for vulnerabilities

- Sharing sensitive host directories

- Using outdated base images with known CVEs

Follow the principle of least privilege and scan images for known vulnerabilities, for example with Docker Scout (docker scout cves), which replaced the older docker scan command.
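One small way to act on the first item in that list is to have the application notice when it is running as root. This is a minimal sketch, not a substitute for setting a non-root USER in the Dockerfile; the function name `running_as_root` is illustrative.

```python
import os

def running_as_root():
    """True if the current process has effective UID 0 (POSIX systems only)."""
    geteuid = getattr(os, "geteuid", None)  # not available on Windows
    return geteuid is not None and geteuid() == 0

if running_as_root():
    print("warning: running as root; consider a non-root USER in the Dockerfile")
```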

“Containers are hard to learn”

The basics are actually quite simple thanks to Docker’s user-friendly interface. Most developers can be productive with just a few commands.

“My data will disappear”

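By default, yes! Containers are ephemeral: anything written inside a container lives in its writable layer and is lost when the container is removed. Common beginner mistakes: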

- Not understanding that containers are stateless by design

- Forgetting to use volumes for persistent data

- Not publishing ports correctly with the -p flag

- Assuming containers will keep running after system restart (use a --restart policy)
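The flags mentioned in that list can be combined in one docker run invocation. The sketch below only builds and prints the command; it does not execute anything. The container name demo-db and the named volume pgdata are assumptions made for illustration; /var/lib/postgresql/data is the data directory used by the official postgres:15 image.

```python
import shlex

def docker_run_command(image, *, name=None, ports=None, volumes=None,
                       env=None, restart=None):
    """Build a `docker run` argument list (nothing is executed)."""
    cmd = ["docker", "run", "-d"]
    if name:
        cmd += ["--name", name]
    if restart:
        cmd += ["--restart", restart]          # restart policy, e.g. "unless-stopped"
    for host_port, container_port in (ports or {}).items():
        cmd += ["-p", f"{host_port}:{container_port}"]   # publish a port
    for volume, mount_path in (volumes or {}).items():
        cmd += ["-v", f"{volume}:{mount_path}"]          # persist data in a volume
    for key, value in (env or {}).items():
        cmd += ["-e", f"{key}={value}"]
    cmd.append(image)
    return cmd

cmd = docker_run_command(
    "postgres:15",
    name="demo-db",                                    # hypothetical container name
    restart="unless-stopped",
    ports={5432: 5432},
    volumes={"pgdata": "/var/lib/postgresql/data"},    # "pgdata" is an assumed volume name
    env={"POSTGRES_PASSWORD": "secret"},
)
print(shlex.join(cmd))
```

With the volume in place, removing and recreating the container should leave the database files intact, since they live in pgdata rather than in the container's writable layer.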

Try It Yourself: Hello Docker

# Run an nginx web server

docker run -d -p 8080:80 nginx

# Visit http://localhost:8080 in your browser

# You should see the nginx welcome page!

# See running containers

docker ps

# Stop the container

docker stop <container-id>

Boom: you’re running production-grade software in a container! In just one command, you’ve deployed a web server that would normally require installing packages, configuring files, and managing services.
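If you would rather check from a script than a browser, here is a minimal sketch using only the Python standard library. The helper name `is_up` is illustrative; it assumes the nginx container from the commands above is still running and published on port 8080.

```python
import urllib.error
import urllib.request

def is_up(url, timeout=2.0):
    """Return True if an HTTP GET to `url` answers with a 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False

print(is_up("http://localhost:8080"))
```

Running it again after docker stop should print False, since nothing is listening on the port anymore.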

Conclusion

Containers aren’t just hype. They solve real deployment headaches that have plagued developers for decades. By providing consistency, speed, and scalability, containers have become the foundation of modern software delivery.

The key benefits we’ve covered:

- Consistency: “It works on my machine” becomes “it works everywhere”

- Efficiency: Seconds to start vs minutes for VMs, using a fraction of the resources

- Portability: Write once, run anywhere Docker is available

- Scalability: Spin up hundreds of instances effortlessly

Understanding what containers are and how Docker makes them accessible is your first step toward mastering modern deployment practices.

In the next article, we’ll dive into the Linux magic that powers Docker: namespaces, cgroups, and union filesystems. If you’ve ever wondered how Docker achieves this isolation, performance, and portability at the kernel level — don’t miss it. We’ll explore the actual mechanisms that make containers possible and why they’re so much more efficient than traditional virtualization.

Next article: Docker Under the Hood: The Linux Magic Behind Containers.

Ready to get your hands dirty? Install Docker Desktop and try the examples above. The best way to understand containers is to build and run them yourself!